Code Agents in the IDE? Yes please!

Why now?

We finally have real competition in IDE code agents! I’ve been using ChatGPT models for a few years now (since 2020), with a Plus subscription on the desktop app and on my phone. It works for most stuff, and now with GPT-5 it’s been incredible for coding, but context-juggling is an issue: you bounce between your IDE and a chat window, copying and pasting files, code segments and logs back and forth.

In my opinion, every developer can benefit from using a coding agent nowadays. I'll take you through what I've been using recently, and you can pick something to try for yourself if you want.

My Setup

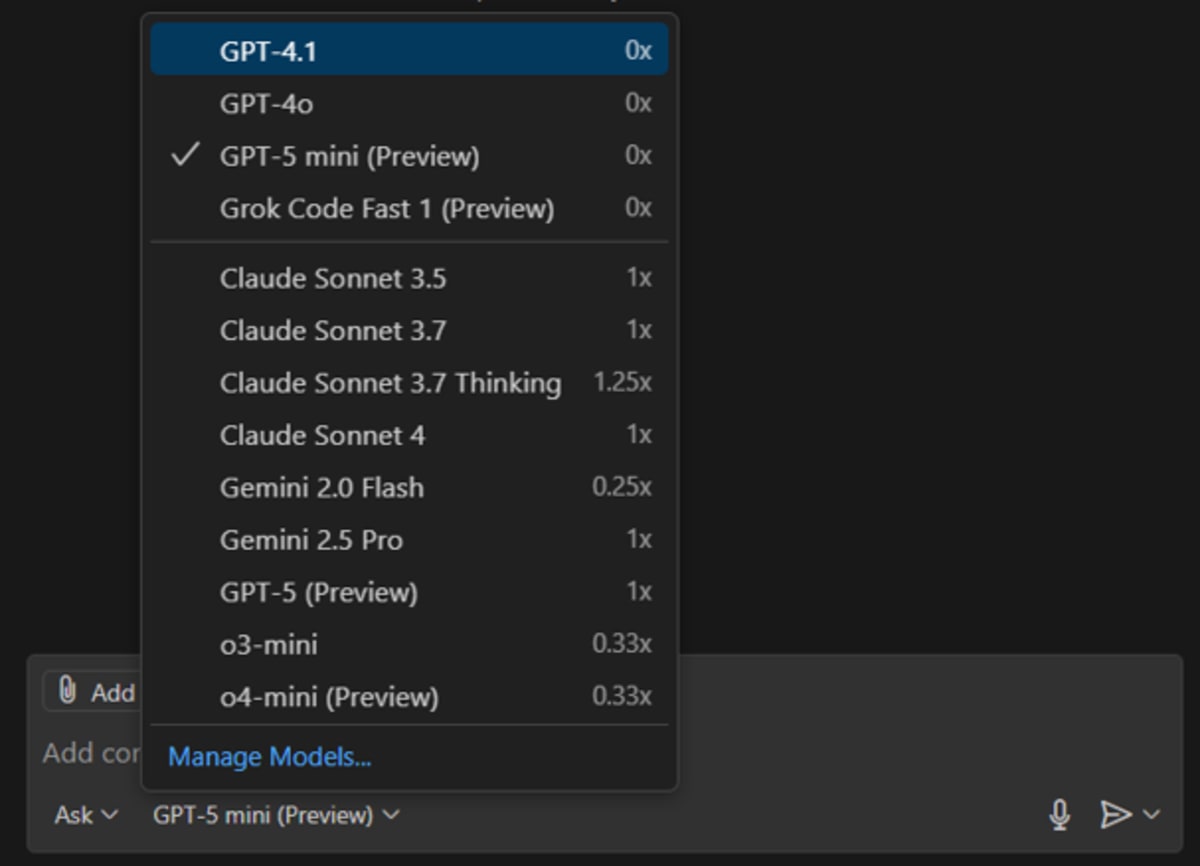

I am a VS Code user. Over the last year I also started using GitHub Copilot, an AI coding assistant that runs inside VS Code. My university email gives me Copilot Pro (normally ~$10/month) for free, which lets me use chat/agent mode with different models (e.g. Claude Sonnet 4, Gemini 2.5 Pro, and recently GPT-5). If you’re eligible and interested, you can apply here: [GitHub Docs]

Since GPT-5 landed this month, Copilot’s agent mode has been way better, so it became my default. Claude Opus 4.1 is sadly only on Pro+ ($39/month), so before GPT-5 took over I was mostly comparing Claude Sonnet 4, o3-mini-high and Gemini 2.5 Pro.

With GPT-5 on Copilot, I became more productive on both my side projects and my day-job tasks, until I hit Copilot’s monthly premium-request limit within two weeks. Well, time to diversify. What else can I run inside my IDE?

I ended up setting up three agents: Gemini CLI, Codex, and GitHub Copilot. For context, I only pay for ChatGPT Plus (~€21/month).

Side note on Claude Code CLI: I’ve bounced between subscriptions (ChatGPT, Gemini, Claude) over the years. Claude’s limits used to be a constant issue for me, and I’ve seen reports of folks racking up high bills, so I don't use Claude Code. I was already satisfied with GPT for daily work and code, so I stuck with it and used Claude Sonnet 4 on the side through GitHub Copilot.

Let’s take a look at the three agents I’m actually using.

Gemini CLI + VS Code “Gemini CLI Companion”

What it is: Google’s open-source AI agent that lives in your terminal. It officially launched on June 25, 2025, and it’s free, open, and very actively developed. It had a rough launch, but it has been getting better, fast. [Gemini CLI]

Generous free tier when you log in with a personal Google account: 60 requests/min and 1,000/day. I haven’t hit the wall yet. [Gemini CLI GitHub]
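To see how the two free-tier limits interact, here is a back-of-the-envelope check in Python. It only uses the 60/min and 1,000/day figures quoted above; the function names and the workload numbers are hypothetical, and it assumes every request counts equally against both limits. One agent prompt may trigger several model requests, so treat the pace numbers as upper bounds.

```python
import math

# Free-tier limits quoted above for a personal Google account.
REQUESTS_PER_MINUTE = 60
REQUESTS_PER_DAY = 1_000


def minutes_to_daily_cap(rpm: int = REQUESTS_PER_MINUTE, daily: int = REQUESTS_PER_DAY) -> int:
    """Minutes of sustained requests at `rpm` before the daily cap is reached."""
    return math.ceil(daily / rpm)


def fits_in_free_tier(requests_per_minute: float, active_minutes: int) -> bool:
    """Hypothetical workload check: does a steady pace stay within both limits?

    Note: a single agent prompt may fan out into several model requests,
    so `requests_per_minute` should count model requests, not prompts.
    """
    within_rate = requests_per_minute <= REQUESTS_PER_MINUTE
    within_daily = requests_per_minute * active_minutes <= REQUESTS_PER_DAY
    return within_rate and within_daily


print(minutes_to_daily_cap())        # 1000 / 60 is about 16.7, rounded up to 17
print(fits_in_free_tier(2, 8 * 60))  # 2 req/min over an 8-hour day = 960 requests
```

In other words, the per-minute limit only matters for bursts; over a working day, the 1,000/day cap is the one you would realistically run into.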

Access to Gemini 2.5 Pro with a 1M-token context window (handy with large codebases).

It’s open-source (GitHub repo), moves fast, and publishes weekly preview/stable releases; as of Aug 28, 2025, the stable release is 0.2.1, and a 0.3.0 preview build is rolling out today.

A new VS Code companion extension (mid-Aug 2025) adds inline diffs and editor awareness, which is amazing. [Google Developers Blog], [Visual Studio Marketplace - Gemini CLI Companion]

How I’m using it:

I keep Gemini CLI as my third option: for when I bump into Copilot/Codex quotas, or when I’m in a huge codebase that benefits from the 1M context window. If you want the full command options, check the docs. [Gemini CLI]

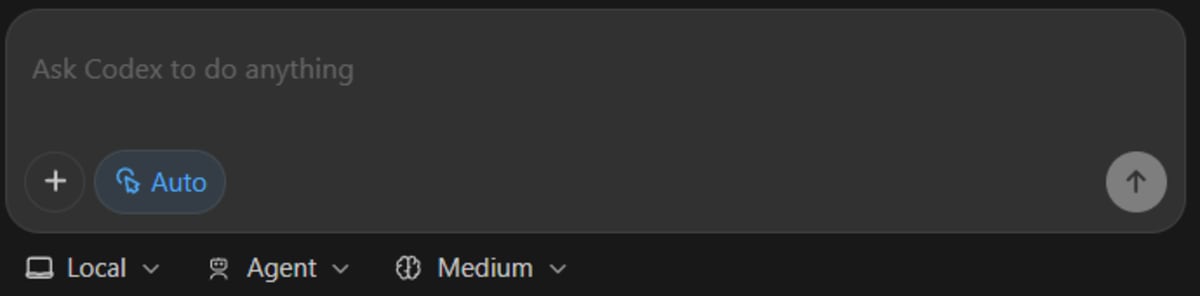

OpenAI Codex CLI (now with IDE integration)

Where it started:

Codex Web appeared first (May 2025), running in cloud sandboxes connected to your GitHub repos. It was an interesting concept but unusable for me at the time: my thesis setup lived on a university HPC cluster, so the environment friction slowed me down and made the tool impractical. [OpenAI]

What changed:

Codex CLI now runs locally and is included with ChatGPT Plus (and higher). You can sign in with your OpenAI account. [Codex Github]

There’s a native IDE extension (VS Code, Cursor, Windsurf) so you can pair with Codex side-by-side in your editor. [Codex]

Usage Limits (Plus):

I couldn’t find a precise public “hard cap” online, but OpenAI’s help center guidance says typical Plus users get roughly 30–150 local messages per 5 hours (plus an undisclosed weekly limit), with more on higher plans. I haven’t hit the limit yet; I'll see how it goes. [OpenAI Help Center]

How I’m using it:

Early impressions are solid. I plan to run it in parallel with Copilot and see which one I prefer as my primary agent.

GitHub Copilot

This has been my primary coding agent for the longest (it has been publicly rolling out since 2022). The big upside is multi-model support across vendors, with a clean UX and agent mode. [GitHub Copilot]

Plans & limits (individuals):

Free: 2,000 code completions/month + 50 premium (chat/agent) requests.

Pro: Unlimited completions, up to 300 premium requests/month (then $0.04/request). Free for verified students, teachers and maintainers; this is what I use.

Pro+: 1,500 premium requests/month and full model access (includes Claude Opus 4.1).

It is worth noting that GitHub Copilot applies per-model rates to premium requests, so one request to a more expensive model can count as more than one premium request: [Usage Rate]
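Because each premium request is weighted by the model's rate, the 300-request Pro allowance can go faster than the raw request count suggests. Here is a minimal Python sketch of a monthly budget, assuming the $0.04 overage is charged on the rate-weighted count. The model names and multipliers are hypothetical placeholders, not GitHub's published rates; check the [Usage Rate] page for real values.

```python
# Allowance and overage price for Copilot Pro as quoted in the plan list.
PRO_MONTHLY_ALLOWANCE = 300
OVERAGE_PRICE_USD = 0.04


def premium_requests_used(usage: dict[str, tuple[int, float]]) -> float:
    """Sum requests x multiplier across models.

    `usage` maps a model name to (number_of_requests, multiplier).
    """
    return sum(count * multiplier for count, multiplier in usage.values())


def overage_cost(used: float,
                 allowance: int = PRO_MONTHLY_ALLOWANCE,
                 price: float = OVERAGE_PRICE_USD) -> float:
    """Dollars owed for weighted premium requests beyond the monthly allowance."""
    return max(0.0, used - allowance) * price


# Hypothetical month: placeholder model names and multipliers.
usage = {
    "model-a": (250, 1.0),  # a hypothetical 1x model
    "model-b": (20, 10.0),  # a hypothetical expensive 10x model
}
used = premium_requests_used(usage)
print(used)                          # 250 + 200 = 450.0
print(round(overage_cost(used), 2))  # (450 - 300) * 0.04 = 6.0
```

Only 270 actual prompts were sent in this example, yet the weighted total blows past the allowance, which is how a monthly limit can run out within a couple of weeks.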

Models:

You can switch models per chat/completion (GPT-5, Claude Sonnet 4, Gemini 2.5 Pro, etc.), and Copilot documents model selection and comparison across clients. This flexibility is one of GitHub Copilot’s biggest advantages.

Context windows:

Practical context in Copilot Chat depends on the client and model. From what I found, GitHub publicly noted in late 2024 that Copilot Chat used a 64k context window with GPT-4o, whose native context window is 128k. Bottom line: don’t assume the model maximum (e.g., Gemini’s 1M) carries over inside Copilot. I am still unsure whether the reported 200k context window of GPT-5 with Codex CLI carries over to Copilot. [GPT-4o GitHub Copilot, GPT-5 Context window]

How I’m using it:

This is still my main coding agent. The ability to hop between “big” models for premium requests and “smaller/faster” ones for unlimited completions is practical. I’m experimenting with Codex + GPT-5 locally alongside it, and I think I'll stick with this combination for a while.

Comparative reflection (a.k.a. “when I reach for which”)

Gemini CLI + Companion: The 1M context and generous free quota make it a perfect backup and great for large repos.

Codex: Since I’m already paying for ChatGPT Plus, it’s a no-brainer to use Codex alongside Copilot. With GPT-5 as the default, it's been great so far.

Copilot: Multi-model flexibility, and a free Pro subscription if you're a student. A caveat: Gemini 2.5 Pro’s 1M-token context doesn’t translate 1:1 inside Copilot, and I assume the same holds for other models. I’ll keep Copilot for Sonnet 4 and GPT-5 while seeing how Codex compares.

Conclusion

Agents inside the IDE absolutely boost productivity, but only if you scope prompts and review diffs carefully. It still annoys me that agents often go ahead and replace big chunks of code, creating large diffs, even when the prompt is specific about what they should do.

I’ll use all three: primarily Codex and Copilot, with Gemini CLI as my flexible third option.

I’ve used them on Windows and WSL without any issues.

All three support project-aware instructions/config (e.g., `.gemini`, `AGENTS.md`, `.github`), but I haven't used them yet. I prefer thorough planning in chat first, then small, targeted edits.

I haven’t tried any of the agents’ other features yet, such as their MCP server integrations.

If you've found that project-aware instructions/config boosted the models' performance, have useful MCP server use cases, or have anything else to add about coding agents, feel free to share it with me.

I’ll keep experimenting with all three coding agents and see how it goes. If this nudged you to try a new coding agent, then the post did its job. I hope you enjoyed the post!